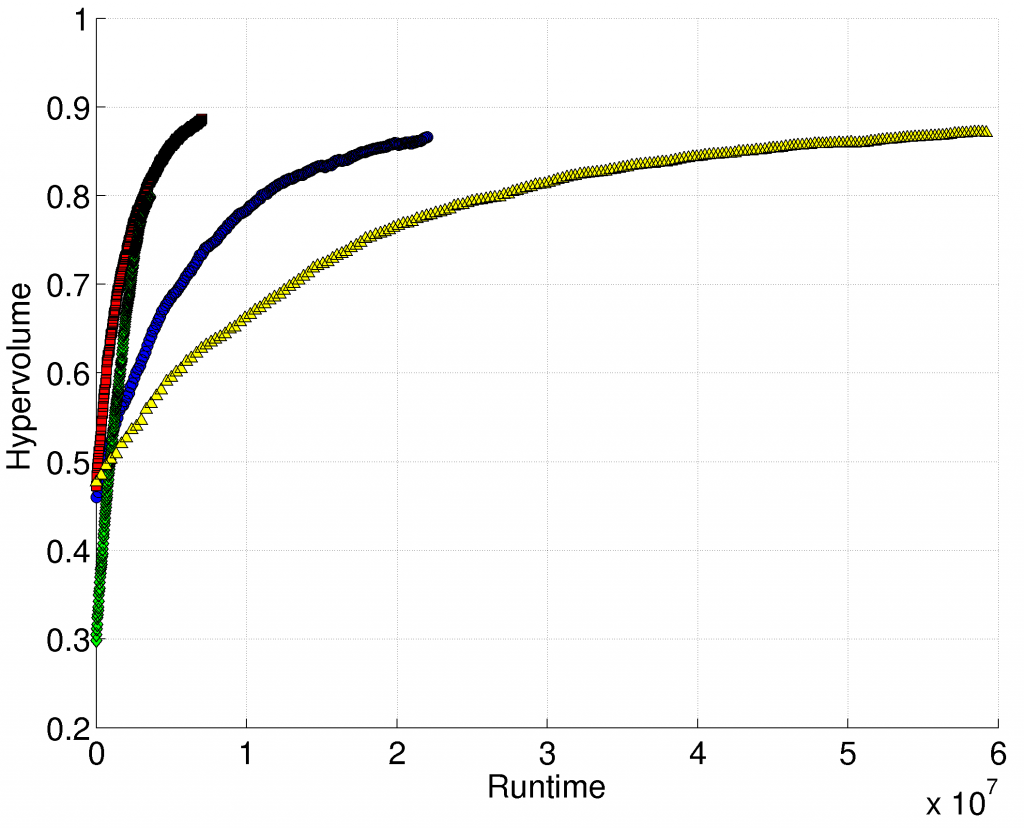

The figure shows a runtime analysis of several popular classifiers used in multi-objective feature selection, where the task is to identify piano occurrence in musical intervals. In my opinion it reveals some very interesting details. X axis: hypervolume of the obtained solutions, where both objectives (number of selected features and classification error) are minimized, so larger values are better. Y axis: runtime in ms. The number of evaluations was limited to 2000 for all classifiers.
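The post does not say how the hypervolume was computed or which reference point was used. As a minimal sketch, assuming both objectives are normalized to [0, 1] (fraction of selected features and classification error) and a reference point of (1, 1), the two-objective hypervolume can be computed with a simple sweep over the solutions sorted by the first objective. The function name `hypervolume_2d` and the example front are hypothetical.

```python
from typing import Iterable, Tuple

# (selected-feature fraction, classification error); both are minimized.
# The normalization to [0, 1] is an assumption for this sketch.
Point = Tuple[float, float]


def hypervolume_2d(points: Iterable[Point], ref: Point = (1.0, 1.0)) -> float:
    """Area dominated by `points` and bounded by the reference point `ref`.

    Both objectives are minimized. Points that do not strictly dominate
    `ref` contribute nothing and are skipped.
    """
    r1, r2 = ref
    # Keep only points inside the reference box, sorted by the first objective
    # (ties broken by the second, so the better point at equal f1 comes first).
    inside = sorted(p for p in points if p[0] < r1 and p[1] < r2)

    volume = 0.0
    best_f2 = r2  # lowest second-objective value seen so far in the sweep
    for f1, f2 in inside:
        if f2 < best_f2:  # dominated points never lower best_f2 and are skipped
            # Horizontal strip between the previous best f2 and this point's f2,
            # extending from f1 to the reference bound r1.
            volume += (r1 - f1) * (best_f2 - f2)
            best_f2 = f2
    return volume


if __name__ == "__main__":
    # Hypothetical front: fraction of features kept vs. error rate.
    # The last point is dominated by (0.30, 0.08) and adds nothing.
    front = [(0.05, 0.20), (0.10, 0.12), (0.30, 0.08), (0.40, 0.15)]
    print(round(hypervolume_2d(front), 4))  # prints 0.86
```

A larger value means the front reaches lower error with fewer features. The value depends on the reference point and the normalization, so hypervolumes of different classifiers are only comparable when computed under the same settings.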

Support Vector Machine (yellow triangles) requires a large amount of computing time. Random Forest (red squares) is a clear winner: it achieves a large hypervolume and is fast. Naive Bayes (green diamonds) is the worst with regard to hypervolume but is the fastest method. Decision Tree C4.5 (blue circles) lies somewhere between SVM and RF. Notably, C4.5 seems to be significantly slower than RF, even though RF builds many trees, presumably because of its complex pruning optimization techniques. With proper parameter tuning, SVM could become the winner in terms of hypervolume (only a linear kernel was used here), but I am not sure its runtime could be reduced very much. The classifier implementations were provided by RapidMiner and are mostly based on the WEKA library.

At any rate, I miss this kind of analysis in many papers: if a method provides the best quality but requires weeks to run, it is not really a nice option 🙂 However, such measurements are very difficult to provide, since runtime depends on hardware, OS, implementation, etc.